LambdaCube 3D

Purely Functional Rendering Engine

A few thoughts on geometry shaders

Posted on June 21, 2013

We just added a new example to the LambdaCube repository, which shows off cube-map-based reflections. Reflections are rendered by sampling a cube map, which is created by rendering the world from the centre of the reflecting object in six directions. This is done in a single pass, using a geometry shader to replicate every incoming triangle six times. Here is the final result:

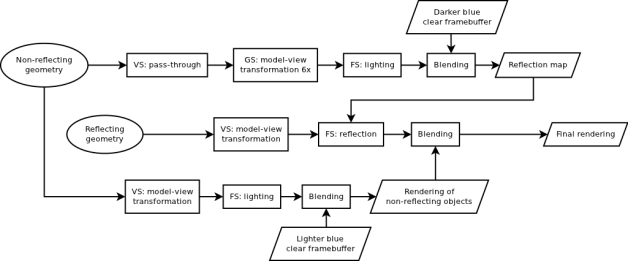
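Rendering from the centre of the reflecting object in six directions needs one view matrix per cube face. The sketch below builds such matrices in plain Haskell under stated assumptions: the post does not say how its six camera matrices are computed, so the face order and up vectors follow the common OpenGL cube-map convention (+X, -X, +Y, -Y, +Z, -Z), and `lookAt`, `cubeFaces` and `cubeFaceViews` are hypothetical helpers that return row-major matrices as lists of rows.

```haskell
type V3 = (Float, Float, Float)
type M44 = [[Float]]  -- four rows of a row-major 4x4 matrix

sub3, add3, cross :: V3 -> V3 -> V3
sub3 (a, b, c) (x, y, z) = (a - x, b - y, c - z)
add3 (a, b, c) (x, y, z) = (a + x, b + y, c + z)
cross (a, b, c) (x, y, z) = (b * z - c * y, c * x - a * z, a * y - b * x)

dot3 :: V3 -> V3 -> Float
dot3 (a, b, c) (x, y, z) = a * x + b * y + c * z

normalize3 :: V3 -> V3
normalize3 v@(x, y, z) = (x / l, y / l, z / l)
  where l = sqrt (dot3 v v)

neg3 :: V3 -> V3
neg3 (x, y, z) = (-x, -y, -z)

-- Right-handed look-at matrix: the camera looks down its local -Z axis
lookAt :: V3 -> V3 -> V3 -> M44
lookAt eye target up =
  [ row s (negate (dot3 s eye))
  , row u (negate (dot3 u eye))
  , row (neg3 f) (dot3 f eye)
  , [0, 0, 0, 1] ]
  where
    f = normalize3 (sub3 target eye)  -- forward
    s = normalize3 (cross f up)       -- right
    u = cross s f                     -- true up
    row (x, y, z) w = [x, y, z, w]

-- Assumption: OpenGL face order and up vectors (+X, -X, +Y, -Y, +Z, -Z)
cubeFaces :: [(V3, V3)]
cubeFaces =
  [ (( 1, 0, 0), (0, -1, 0)), ((-1, 0, 0), (0, -1, 0))
  , (( 0, 1, 0), (0, 0, 1)),  (( 0, -1, 0), (0, 0, -1))
  , (( 0, 0, 1), (0, -1, 0)), (( 0, 0, -1), (0, -1, 0)) ]

-- Hypothetical helper: one view matrix per cube-map layer, all
-- centred on the reflecting object's position
cubeFaceViews :: V3 -> [M44]
cubeFaceViews centre =
  [ lookAt centre (centre `add3` dir) up | (dir, up) <- cubeFaces ]
```

Since each camera looks down its local -Z axis, a point one unit in front of a face, such as (1, 0, 0) for the +X face with the centre at the origin, lands at (0, 0, -1) in that face's view space.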

While the main focus of this blog is language and API design, we need to describe the pipeline structure of the example to put the rest of the discussion into context. The high-level structure corresponds to the following data-flow graph:

The most important observation is that several pieces of this graph are reused multiple times. For instance, all geometry goes through the model-view transformation, but sometimes this is performed in a vertex shader (VS), sometimes in a geometry shader (GS). Also, the same lighting equation is used when creating the reflection map as well as the non-reflective parts of the final rendering, so the corresponding fragment shader (FS) is shared.

The Good

For us, the most important result of writing this example was that we could express all the above-mentioned instances of shared logic in a straightforward way. The high-level graph structure is captured by the top declarations in sceneRender’s definition:

```haskell
sceneRender = Accumulate accCtx PassAll reflectFrag (Rasterize rastCtx reflectPrims) directRender
  where
    directRender = Accumulate accCtx PassAll frag (Rasterize rastCtx directPrims) clearBuf
    cubeMapRender = Accumulate accCtx PassAll frag (Rasterize rastCtx cubePrims) clearBuf6

    accCtx = AccumulationContext Nothing (DepthOp Less True :. ColorOp NoBlending (one' :: V4B) :. ZT)
    rastCtx = triangleCtx { ctxCullMode = CullFront CCW }
    clearBuf = FrameBuffer (DepthImage n1 1000 :. ColorImage n1 (V4 0.1 0.2 0.6 1) :. ZT)
    clearBuf6 = FrameBuffer (DepthImage n6 1000 :. ColorImage n6 (V4 0.05 0.1 0.3 1) :. ZT)

    worldInput = Fetch "geometrySlot" Triangles (IV3F "position", IV3F "normal")
    reflectInput = Fetch "reflectSlot" Triangles (IV3F "position", IV3F "normal")

    directPrims = Transform directVert worldInput
    cubePrims = Reassemble geom (Transform cubeMapVert worldInput)
    reflectPrims = Transform directVert reflectInput
```

The top-level definition describes the last pass, which draws the reflective capsule (whose geometry is carried by the primitive stream reflectPrims) on top of the image emitted by a previous pass called directRender. The two preceding passes render the scene without the capsule (worldInput), once to a screen-sized framebuffer and once to the cube map. The pipeline section generating the cube map has a reassemble phase, which corresponds to the geometry shader. Note that these two passes have no data dependencies on each other, so the back-end can execute them in any order.
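The scheduling freedom mentioned above can be illustrated with a small plain-Haskell model (not LambdaCube code) that treats the three passes as nodes of a dependency graph and enumerates every execution order a back-end could legally pick. `Pass`, `deps`, `validOrder` and `validOrders` are hypothetical names, and the sketch assumes that reflectFrag samples the cube map written by cubeMapRender, so the final pass depends on both earlier ones.

```haskell
import Data.List (elemIndex, permutations)
import Data.Maybe (fromJust)

-- Hypothetical model of the example's three passes (not LambdaCube API)
data Pass = DirectRender | CubeMapRender | ReflectRender
  deriving (Show, Eq, Enum, Bounded)

-- Passes whose output a given pass consumes.
-- Assumption: the final pass draws over directRender's image and
-- samples the cube map produced by cubeMapRender.
deps :: Pass -> [Pass]
deps ReflectRender = [DirectRender, CubeMapRender]
deps _             = []

-- An order is valid if every pass runs after all of its dependencies
validOrder :: [Pass] -> Bool
validOrder order = and [ position d < position p | p <- order, d <- deps p ]
  where
    position x = fromJust (elemIndex x order)

-- All schedules a back-end could choose from
validOrders :: [[Pass]]
validOrders = filter validOrder (permutations [minBound .. maxBound])
```

Evaluating validOrders yields exactly two schedules, both ending with ReflectRender: DirectRender and CubeMapRender may run in either order.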

It’s easy to see that the same fragment shader, frag, is used in the first two passes. The more interesting question is how to express the model-view transformation in one place and use it both in directVert and in geom. As it turns out, we can simply extract the common functionality and give it a name. The resulting function is frequency-agnostic, which is reflected in its type, where the frequency parameter f stays polymorphic:

```haskell
transformGeometry :: Exp f V4F -> Exp f V3F -> Exp f M44F -> (Exp f V4F, Exp f V4F, Exp f V3F)
transformGeometry localPos localNormal viewMatrix = (viewPos, worldPos, worldNormal)
  where
    worldPos = modelMatrix @*. localPos
    viewPos = viewMatrix @*. worldPos
    worldNormal = normalize' (v4v3 (modelMatrix @*. n3v4 localNormal))
```
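The reuse works because the frequency is only a type index. The self-contained sketch below imitates the idea with a phantom type parameter; `Exp`, `V`, `G`, `Vec4`, `scale4`, `vertexStage`, `geometryStage` and `unExp` are hypothetical stand-ins rather than LambdaCube’s actual definitions, and the "expressions" are plain values instead of shader code.

```haskell
-- Hypothetical frequency tags for the vertex and geometry shader stages
data V
data G

-- Hypothetical expression type: f is a phantom parameter recording the
-- frequency, the wrapped value is just a plain Haskell value here
newtype Exp f a = Exp a

type Vec4 = (Float, Float, Float, Float)

unExp :: Exp f a -> a
unExp (Exp a) = a

-- Frequency-agnostic helper, in the spirit of transformGeometry:
-- it works for any f, but input and output share the same f
scale4 :: Float -> Exp f Vec4 -> Exp f Vec4
scale4 k (Exp (x, y, z, w)) = Exp (k * x, k * y, k * z, w)

-- Stage-specific code fixes f and still reuses the shared helper
vertexStage :: Exp V Vec4 -> Exp V Vec4
vertexStage = scale4 2

geometryStage :: Exp G Vec4 -> Exp G Vec4
geometryStage = scale4 2

-- Mixing frequencies is rejected by the type checker, e.g.:
-- bad :: Exp V Vec4 -> Exp G Vec4
-- bad = scale4 2
```

The commented-out `bad` shows the benefit of keeping f in the type: sharing code across stages is free, while accidentally crossing a frequency boundary does not compile.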

The simpler use case is directVert, which just wraps the above functionality in a vertex shader:

```haskell
directVert :: Exp V (V3F, V3F) -> VertexOut () (V3F, V3F, V3F)
directVert attr = VertexOut viewPos (floatV 1) ZT (Smooth (v4v3 worldPos) :. Smooth worldNormal :. Flat viewCameraPosition :. ZT)
  where
    (localPos, localNormal) = untup2 attr
    (viewPos, worldPos, worldNormal) = transformGeometry (v3v4 localPos) localNormal viewCameraMatrix
```
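To check the arithmetic of transformGeometry outside the shader language, here is a CPU-side sketch on plain tuples and lists. It assumes, as the names suggest, that v3v4 extends a position with w = 1 while n3v4 extends a normal with w = 0, so the model matrix’s translation moves positions but leaves normals alone. `mulMV`, `translation` and `transformGeometryCPU` are hypothetical names, and the local `v3v4`, `n3v4` and `v4v3` are CPU stand-ins for the shader functions of the same name.

```haskell
type V3 = (Float, Float, Float)
type V4 = (Float, Float, Float, Float)
type M44 = [V4]  -- four rows, row-major

-- Matrix-vector product (the role of @*. in the shader code)
mulMV :: M44 -> V4 -> V4
mulMV rows v = toV4 (map (dot4 v) rows)
  where
    dot4 (a, b, c, d) (x, y, z, w) = a * x + b * y + c * z + d * w
    toV4 [a, b, c, d] = (a, b, c, d)
    toV4 _            = error "mulMV: expected four rows"

-- Assumed semantics: positions get w = 1, normals (directions) get w = 0
v3v4, n3v4 :: V3 -> V4
v3v4 (x, y, z) = (x, y, z, 1)
n3v4 (x, y, z) = (x, y, z, 0)

v4v3 :: V4 -> V3
v4v3 (x, y, z, _) = (x, y, z)

normalize3 :: V3 -> V3
normalize3 (x, y, z) = (x / l, y / l, z / l)
  where l = sqrt (x * x + y * y + z * z)

translation :: V3 -> M44
translation (tx, ty, tz) =
  [(1, 0, 0, tx), (0, 1, 0, ty), (0, 0, 1, tz), (0, 0, 0, 1)]

-- Same structure as transformGeometry, with the model matrix passed in.
-- Like the original, normals are transformed by the model matrix itself,
-- which is exact for rotations, translations and uniform scaling.
transformGeometryCPU :: M44 -> V4 -> V3 -> M44 -> (V4, V4, V3)
transformGeometryCPU modelMatrix localPos localNormal viewMatrix =
  (viewPos, worldPos, worldNormal)
  where
    worldPos    = mulMV modelMatrix localPos
    viewPos     = mulMV viewMatrix worldPos
    worldNormal = normalize3 (v4v3 (mulMV modelMatrix (n3v4 localNormal)))
```

With a model matrix that translates by (10, 0, 0), the position (1, 2, 3) moves to (11, 2, 3, 1) in world space, while the normal (0, 0, 2) comes out as the unit vector (0, 0, 1), unaffected by the translation.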

As for the geometry shader…

The Bad

… we already mentioned in the introduction of our functional pipeline model that we aren’t happy with the current way of expressing geometry shaders. The current approach maps two nested for loops quite directly onto an initialisation function and two state transformers, which are essentially unfold kernels. The outer loop emits one primitive per iteration, while the inner loop emits the individual vertices of that primitive. Without further ado, here’s the geometry shader needed by the example:

```haskell
geom :: GeometryShader Triangle Triangle () () 6 V3F (V3F, V3F, V3F)
geom = GeometryShader n6 TrianglesOutput 18 init prim vert
  where
    init attr = tup2 (primInit, intG 6)
      where
        primInit = tup2 (intG 0, attr)

    prim primState = tup5 (layer, layer, primState', vertInit, intG 3)
      where
        (layer, attr) = untup2 primState
        primState' = tup2 (layer @+ intG 1, attr)
        vertInit = tup3 (intG 0, viewMatrix, attr)
        viewMatrix = indexG (map cubeCameraMatrix [1..6]) layer

    vert vertState = GeometryOut vertState' viewPos pointSize ZT (Smooth (v4v3 worldPos) :. Smooth worldNormal :. Flat cubeCameraPosition :. ZT)
      where
        (index, viewMatrix, attr) = untup3 vertState
        vertState' = tup3 (index @+ intG 1, viewMatrix, attr)
        (attr0, attr1, attr2) = untup3 attr
        (localPos, pointSize, _, localNormal) = untup4 (indexG [attr0, attr1, attr2] index)
        (viewPos, worldPos, worldNormal) = transformGeometry localPos localNormal viewMatrix
```

The init function’s sole job is to define the initial state and iteration count of the outer loop. The initial state is a tuple holding a loop counter set to zero and the input of the shader, attr, while the iteration count is 6. The prim function increments this counter, specifies the layer for the primitive (equal to the counter), and picks the appropriate view matrix out of six uniforms. It also defines the iteration count of the inner loop (3, since we’re drawing triangles) and its initial state, which contains another counter set to zero, the chosen view matrix, and the attribute tuple. Finally, the vert function calculates the output attributes using transformGeometry, as well as its next state, which differs from the current one only in having the counter incremented.
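The init/prim/vert structure described above can be modelled outside the shader language with two nested `Data.List.unfoldr` calls. This is a plain-Haskell sketch, not LambdaCube code: `GSKernels`, `initK`, `primK`, `vertK`, `runGeometryShader` and `cubeReplicate` are hypothetical names, and in the cube-map instance the layer number stands in for the view matrix that the real prim function hands to the inner loop.

```haskell
import Data.List (unfoldr)

-- Hypothetical model of a geometry shader as unfold kernels:
--   initK: input       -> (outer state, outer iteration count)
--   primK: outer state -> (layer, next outer state, inner state, inner count)
--   vertK: inner state -> (emitted vertex, next inner state)
data GSKernels i p v o = GSKernels
  { initK :: i -> (p, Int)
  , primK :: p -> (Int, p, v, Int)
  , vertK :: v -> (o, v)
  }

-- Run both loops and collect the primitives, each tagged with its layer
runGeometryShader :: GSKernels i p v o -> i -> [(Int, [o])]
runGeometryShader k input = unfoldr outer (primInit, primCount)
  where
    (primInit, primCount) = initK k input
    outer (_, 0) = Nothing
    outer (p, n) =
      let (layer, p', vInit, vertCount) = primK k p
      in Just ((layer, unfoldr inner (vInit, vertCount)), (p', n - 1))
    inner (_, 0) = Nothing
    inner (v, n) =
      let (out, v') = vertK k v
      in Just (out, (v', n - 1))

-- Cube-map case: replicate one triangle into layers 0..5.
-- Outer state: (layer counter, triangle).
-- Inner state: (vertex index, layer, triangle); the layer must be passed
-- down explicitly, just like viewMatrix in the real shader.
cubeReplicate :: GSKernels (a, a, a) (Int, (a, a, a)) (Int, Int, (a, a, a)) (Int, a)
cubeReplicate = GSKernels
  { initK = \tri -> ((0, tri), 6)
  , primK = \(layer, tri) -> (layer, (layer + 1, tri), (0, layer, tri), 3)
  , vertK = \(i, layer, tri@(a, b, c)) -> ((layer, [a, b, c] !! i), (i + 1, layer, tri))
  }
```

Running `runGeometryShader cubeReplicate` on a single triangle produces six primitives of three vertices each, 18 vertices in total, which matches the maximum output size of 18 declared for geom.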

On the one hand, we succeeded in reusing the common logic between different shader stages by simply extracting it as a pure function. On the other hand, it is obvious at this point that directly mapping imperative loops results in really awkward code. At least it does the job!

The Next Step?

We’ve been thinking about alternative ways to model geometry shaders that would let us express our intent in a more convenient and ‘natural’ manner. One option we’ve considered lately is to have the shader yield a list of lists, one inner list of vertices per emitted primitive. This would allow us to use scoping to access attributes in the inner loop instead of having to pass them around explicitly, not to mention doing away with explicit loop counters altogether. We could then rely on existing techniques, such as stream fusion, to generate imperative code. However, it is an open question how we could introduce lists or some similar structure into the language without disrupting other parts of it, while keeping the use of the new feature appropriately constrained. One thing is clear: there has to be a better way.
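To give a rough feel for the list-of-lists idea, here is a plain-Haskell sketch rather than shader-language code: each inner list is one output primitive, the triangle and the layer are ordinary variables captured by the comprehensions, and no loop counters or state tuples appear. `geomLists`, `emittedVertices` and the `transformVertex` parameter are hypothetical names, and `concatMap` merely stands in for the flattening that a fusion-based code generator would have to turn back into loops.

```haskell
-- Hypothetical list-of-lists geometry shader: one inner list per
-- primitive, produced for each of the six cube-map layers.
-- transformVertex stands in for the per-layer view transformation.
geomLists :: (Int -> v -> o) -> (v, v, v) -> [(Int, [o])]
geomLists transformVertex (a, b, c) =
  [ (layer, [ transformVertex layer v | v <- [a, b, c] ])
  | layer <- [0 .. 5] ]

-- Flattened vertex stream, roughly what the hardware would receive
emittedVertices :: (Int -> v -> o) -> (v, v, v) -> [o]
emittedVertices f tri = concatMap snd (geomLists f tri)
```

Compared with geom, the inner comprehension reads the layer and the triangle directly from the enclosing scope instead of receiving them through an explicit inner-loop state.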

Your pipeline diagram doesn’t allow reflective objects to see other reflective objects. I know this would cause a loop, but other renderers allow it up to some number of bounces.

Of course not, but that’s simply because this example doesn’t need it. It would be straightforward to extend the pipeline to handle several reflective objects; all we need is more passes and render textures.